Google Spent 40 Minutes Explaining Why I Can't Delete My Own Data

Inside the impossible maze of corporate gaslighting that defines our surveillance economy

The following excerpts are from my November 2025 customer service chat with Google Workspace support; names, contradictions, and corporate doublespeak included.

Google Workspace Support, Karlapudi: “Google doesn’t own your or your Organization Data Leif. [...] You being the owner and admin of this you hold the highest control over the data.”

Leif Kolt: “Yet they will have access and control when they delete it in 3 months [...] For the record: You’ve said the data would auto-delete in 3 months, but also that Google can’t access or delete the data at all. These statements are mutually exclusive.”

This is what “user control” looks like in 2025. Karlapudi spent forty minutes explaining to me that I can’t delete my own data while simultaneously insisting I have complete control over it. It’s the kind of circular logic that would make a used car salesman blush, except he wasn’t trying to sell me anything; he was trying to convince me I already had the control I was desperately trying to exercise.

What started as a simple data deletion request became a masterclass in corporate gaslighting, revealing something far more sinister than one company’s customer service failures. This conversation is a window into the fundamental lie that props up our entire digital economy: the myth that we control our data.

The Impossible Maze

Let me walk you through Karlapudi’s logic, because it’s important to understand how these mental pretzels get twisted. When I asked to delete my Gemini AI chat history immediately, he explained that Google “does not have authority over data and data retention settings,” but in the same breath assured me the data would be “automatically deleted in 3 months.”

When I pointed out this contradiction (how can Google automatically delete data they claim no authority over?), he pivoted to a new explanation: it’s like when “you decide to delete a file and permanently deleting it from trash will be automated.” Except I wasn’t asking Google to automate anything. I was asking them to delete it now.

Each time I cornered Karlapudi on a contradiction, he’d introduce a new excuse that contradicted the previous one. First, Google couldn’t access the data. Then they could access it, but only I controlled it. Then the deletion was automated by my settings, but I couldn’t actually trigger that automation myself. It’s an impossible maze where every path to data deletion is blocked by another corporate policy masquerading as a technical limitation.

The most telling moment came when I asked him directly: “Is Google claiming they technically cannot delete this data, or are you choosing not to delete it?” Karlapudi’s response was pure corporate poetry: “As Google Workspace support, we are trying to inform you that after being the platform to manage your organization, as an Admin we have given the option to turn on and off since the start.” A masterclass in saying absolutely nothing while using a lot of words.

The Big Lie

Here’s what Google wants you to believe: you’re in control. Their privacy policy promises users can “delete your information.” Their marketing campaigns celebrate data freedom and security. Karlapudi spent our entire conversation insisting I had “the highest control over the data.” It’s a beautiful story, wonderful for marketing, and it’s complete bullshit.

“Google doesn’t own your or your Organization Data Leif. [...] We as Workspace can only provide a platform to manage your organization by yourself and you being the owner and admin of this you hold the highest control over the data.” - Google Workspace Support, Karlapudi

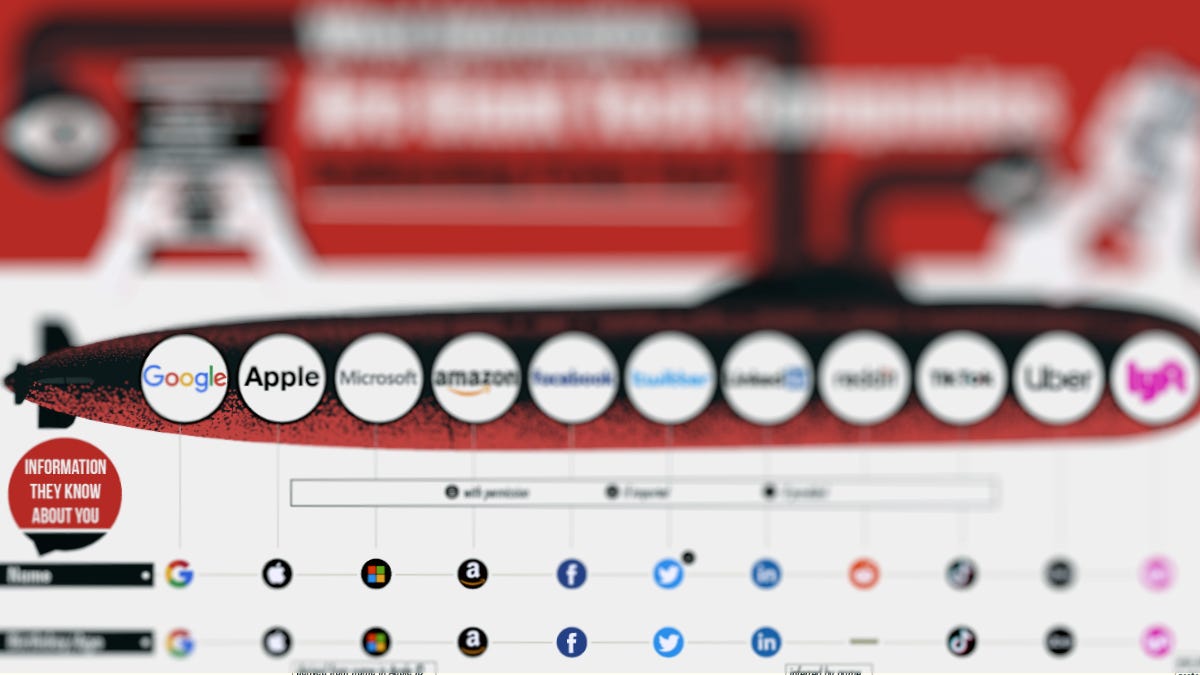

This isn’t just Google’s lie, it’s the foundational myth of the entire tech industry. Apple built a billion-dollar brand around “privacy by design” while creating one of the most surveilled ecosystems in human history. Every app, every click, every pause while you read this sentence gets cataloged, analyzed, and monetized. But hey, you can turn off location services in settings, so you’re totally in control, right?

Microsoft rebranded their surveillance capitalism as “productivity insights.” Meta calls their data harvesting “connecting people.” Amazon’s Alexa is just a helpful assistant that definitely isn’t recording everything you say (except when it is, whoops, our bad). Even Spotify knows more about your emotional state than your therapist, tracking not just what you listen to, but when you skip songs, how long you pause between tracks, and whether you’re the type of person who listens to sad music at 2 AM.

The pattern is always the same: promise control, deliver surveillance, then gaslight users when they try to exercise the rights they were promised. It’s not a bug in the system; it’s the entire business model. These companies aren’t selling products to you; they’re selling you as a product to advertisers, data brokers, and anyone else willing to pay for insights into human behavior.

The “user control” narrative serves one purpose: providing legal cover while they strip-mine our digital lives for profit.

The Data Harvest

“We are only here to help you with settings and processes as per what all you have signed up for.” - Google Workspace Support, Karlapudi

Most people think they understand what data gets collected. They picture targeted ads based on their Amazon searches, maybe some location tracking from Google Maps, perhaps a little listening in on conversations. They have no idea how deep this rabbit hole goes.

Your smartphone knows when you’re depressed before you do. It tracks how fast you type, how long you hesitate before sending texts, even the slight tremor in your hand when you’re anxious. Dating apps don’t just know who you swipe right on; they know how long you stared at someone’s photo, whether you zoomed in, and what time of day you’re most likely to make impulsive romantic decisions.

Smart TVs record conversations even when they’re “off.” Your car tracks everywhere you go, how fast you drive, how hard you brake, and sells that data to insurance companies who adjust your rates accordingly. That fitness tracker counting your steps? It’s also monitoring your sleep patterns, heart rate variability, and sexual activity, then sharing insights with data brokers who sell “wellness profiles” to employers and health insurers.

But here’s where it gets truly dystopian: they’re not just collecting what you do, they’re predicting what you’ll do next. Google’s algorithms know you’re pregnant before you tell your partner. Facebook can predict divorce with at least 60% accuracy. Amazon knows you’re going to buy something before you know you want it.

Streaming services don’t just track your music, they analyze your listening patterns to determine your mental health status, political leanings, and likelihood to make major life changes. That innocent playlist you made? It’s a psychological profile being sold to the highest bidder.

The scope isn’t just invasive, it’s totalitarian. Every digital interaction becomes data, every data point becomes profit, and every profit motive becomes a reason to collect more.

How We Got Here

This didn’t happen overnight. The surveillance state we’re living in was built brick by brick, with each crisis providing cover for the next expansion of corporate data harvesting.

September 11th gave us the Patriot Act and normalized mass surveillance “for our safety.” The 2008 financial crisis made everyone desperate for free services, so we traded our privacy for Gmail and Facebook accounts. Then smartphones put a tracking device in every pocket, and we called it convenience.

“You have been amazingly patient and understanding throughout,” Karlapudi told me after forty minutes of circular logic.

But the real turning point was the Cambridge Analytica scandal in 2018. For a brief moment, the curtain was pulled back and people saw how their data had been weaponized to manipulate elections. Facebook stock dropped, Congress held hearings, everyone was outraged. Then... nothing. No meaningful legislation. No structural changes. Just some privacy policy updates and promises to “do better.”

The tech oligarchs learned a crucial lesson: they could weather any scandal as long as they controlled the narrative. So they hired armies of lobbyists, funded think tanks, and perfected the art of performative privacy theater. Apple’s “What happens on your iPhone stays on your iPhone” campaign launched right as they were building backdoors for government surveillance.

Now we’re in the endgame. AI models need massive datasets to function, and these companies have spent decades collecting exactly that. Your conversations with support agents like Karlapudi aren’t just customer service interactions; they’re training data for the next generation of AI systems that will make decisions about your creditworthiness, employment prospects, and social credit score.

We sleepwalked into digital feudalism, and now the lords of Silicon Valley are building the infrastructure for total social control. The timing isn’t coincidental either: as Trump and his authoritarian allies gear up to weaponize government power against dissidents, they’ll inherit the most sophisticated surveillance apparatus in human history, built and maintained by the very tech companies that claim to protect our privacy.

The Oligarch’s Playbook

Let’s talk about the men behind the curtain, shall we? Because while Karlapudi was busy explaining why I can’t delete my own data, his bosses were probably yacht-shopping with the profits from selling it.

Elon Musk bought Twitter to “save free speech,” then immediately turned it into a propaganda machine while harvesting user data for his AI ventures. The same guy who warns about AI dangers is feeding every tweet, DM, and deleted draft into his Grok chatbot. It’s like an arsonist starting a fire department: one hell of a magic trick, but the audience always gets burned.

Mark Zuckerberg testified to Congress with a straight, and borderline reptilian, face about protecting user privacy, then spent $100 million building a bunker in Hawaii. Nothing says “I trust the world I’m creating” quite like a fortified compound stocked with enough supplies to survive the societal collapse your business model is careening towards.

Jeff Bezos stepped down as Amazon’s CEO to focus on space travel, because apparently strip-mining Earth’s data wasn’t enough; he needs a whole new planet to surveil. Meanwhile, Amazon’s Alexa devices are recording family conversations and Ring doorbells are building facial recognition databases for police departments. But hey, at least your packages arrive fast.

These aren’t tech visionaries, they’re digital robber barons with better PR teams. They’ve convinced an entire generation that surveillance is innovation, that privacy is selfish, and that resistance is futile. They speak in TED Talks about “connecting humanity” while building systems designed to exploit our psychological vulnerabilities for profit.

The playbook is simple: promise utopia, deliver dystopia, then gaslight anyone who notices the difference.

What They’re Really Building

I have a 3-year-old and a 5-year-old. My wife and I have made the deliberate choice to keep devices out of their daily lives. They might get to draw on my tablet as a special privilege, or giggle at face filters occasionally, but that’s it. No YouTube binges, no apps, no digital babysitters. We’re trying to give them a childhood that isn’t mediated by algorithms.

But I know we’re fighting a losing battle (unless things change), because the world they’re inheriting is being controlled by billionaires and designed to make that choice impossible.

They’re growing up in a reality where their tablet drawings get uploaded to cloud servers, analyzed by AI for developmental markers, and cross-referenced with family data to build predictive profiles. The baby monitors we used when they were infants weren’t just helping us sleep better, they were training voice recognition systems that will follow these kids for the rest of their lives.

By the time my children are adults, the surveillance infrastructure being built today will be so embedded in society that privacy will seem as quaint as rotary phones. They’ll apply for jobs through AI systems that know their porn preferences, their mental health history, and whether they’ve ever googled “how to quit your job.” Their credit scores will factor in their social media posts, their dating app behavior, and whether they buy name-brand or generic groceries.

This isn’t science fiction; it’s the logical endpoint of the systems we’re building right now. Social credit scores are already being tested in pilot programs and are the norm in China. Insurance companies are buying data from fitness trackers and social media platforms. Employers are using AI to screen job candidates based on their digital exhaust.

“We’ve gone full circle. You’ve created an impossible maze where every path to data deletion is blocked by another excuse,” I told Karlapudi.

The oligarchs aren’t just collecting data, they’re building the infrastructure for a world where human autonomy becomes impossible. My kids deserve better than digital serfdom. All our kids do.

Fighting Back

Here’s the thing that keeps me up at night, and the thing that gets me out of bed in the morning: this isn’t inevitable.

Every time you choose Signal over WhatsApp, you’re voting for encryption over surveillance. Every time you choose open-source alternatives over corporate platforms, you’re refusing to be the product. Every time you cover your laptop camera or leave your phone at home during a sensitive conversation, you’re exercising the muscle of digital resistance.

But individual action isn’t enough. We need to organize like our democracy depends on it, because it does. Join a digital rights group or form one locally. Talk to your friends and family, despite their glassy-eyed assumptions that your tin foil hat is too tight (I assure you it’s not). Support organizations like the Electronic Frontier Foundation and Fight for the Future. When your representatives vote on tech legislation, make sure they hear from constituents who understand what’s at stake.

Most importantly, we need to stop accepting the premise that this is just how the world works. There’s nothing natural or inevitable about surveillance capitalism. These are choices: choices made by billionaires who profit from our digital exploitation, enabled by politicians who either don’t understand the technology or don’t care about its consequences as long as they get paid.

We must choose differently. We can demand technology that serves human flourishing instead of corporate profit. We can build systems that enhance our autonomy instead of undermining it. We can create a digital future where my kids grow up with actual control over their data, not just the illusion of it.

“Thanks for the chat transcript. It’ll make an excellent example of why current data deletion policies need reform,” I told Karlapudi at the end of our conversation.

The conversation with Karlapudi wasn’t just about deleting chat history, it was about whether we’d accept a world where corporations gaslight us about our most basic rights. I refuse to accept that world. I refuse to leave that world to my children.

The oligarchs are counting on our resignation, our learned helplessness, our willingness to trade freedom for convenience. But every act of digital resistance, every moment of organized opposition, every refusal to accept their gaslighting as gospel, that’s how we take back control.

Not in three months. Not when it’s convenient for Google’s retention policies. Now.